This content is 15 years old. I don't routinely update old blog posts as they are only intended to represent a view at a particular point in time. Please be warned that the information here may be out of date.

Late last year I questioned the wisdom of trusting critical data to the cloud and cited Google Mail as an example. Whilst the Google Mail service is generally reliable, there have been some well-publicised instances of failure (including data loss). I shouldn’t be too alarmed by that – for many things in life you get what you pay for and I pay Google precisely nothing (although they do get to build up a pretty good profile of my interests against which to target advertising…). So, dusting off the motto from my Scouting days (“Be Prepared”), I set about creating a regular backup of my Google Apps mail – just in case it ever ceased to exist!

I already use the Apple Mail application (mail.app) for IMAP access but I have some concerns about mail.app – it’s failed to send messages (and not stored a draft either) on at least two occasions and basically I don’t trust it! But using Mac OS X (derived from BSD Unix) means that I also have access to various Unix tools (e.g. getmail) and that means I can take a copy of my Google Mail and store it in maildir or mbox format for later retrieval, on a schedule that I set.

The first step is to install some Unix tools on the Mac. I chose DarwinPorts (also known as MacPorts). After running the 1.7.0 installer, I fired up a terminal and entered the following commands:

su - Administrator

cd /opt/local/bin

sudo ./port -d selfupdate

This told me that my installation of MacPorts was already current, so I set about installing the getmail port:

sudo ./port install getmail

The beauty of this process is that it also installed all the prerequisite packages (expat, gperf, libiconv, ncursesw, ncurses, gettext and python25). Having installed getmail, I followed George Donnelly’s advice to create a hidden folder for getmail scripts and a maildir folder for my GmailArchive – both inside my home directory:

mkdir ~/.getmail

mkdir ~/GmailArchive/ ~/GmailArchive/new ~/GmailArchive/tmp ~/GmailArchive/cur

I then created a getmail configuration file (~/.getmail/getmailrc.gmailarchive) and entered the following settings:

[retriever]

type = SimpleIMAPSSLRetriever

server = imap.gmail.com

username = googleaccountname

password = googleaccountpassword

[destination]

type = Maildir

path = ~/GmailArchive/

[options]

verbose = 2

received = false

delivered_to = false

message_log = ~/.getmail/gmail.log
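Because this file holds the account password in plain text, it seems sensible to make it readable only by its owner. The following is a minimal sketch of that idea rather than a step from the original setup; `lock_down` is a hypothetical helper name, and the path in the usage note is the configuration file used in this post.

```python
import os
import stat


def lock_down(path):
    """Restrict a file to owner read/write (mode 0600) and return the new mode bits."""
    path = os.path.expanduser(path)
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
    return stat.S_IMODE(os.stat(path).st_mode)
```

Calling `lock_down("~/.getmail/getmailrc.gmailarchive")` should leave the file with permissions equivalent to `chmod 600`.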

I tested this by running:

/opt/local/bin/getmail -ln --rcfile getmailrc.gmailarchive

but was presented with an error message:

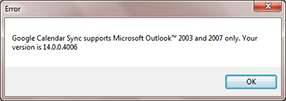

Configuration error: SSL not supported by this installation of Python

That was solved by running:

sudo ./port install py25-socket-ssl

(which installed zlib, openssl and py25-socket-ssl), after which I could re-run the getmail command and watch as my terminal session was filled with messages being downloaded (and the folder at ~/GmailArchive/new started to fill up). Then I saw a problem – even though I have a few thousand messages, I noticed that getmail was only ever downloading the contents of my Inbox.

Eventually, I solved this by adding the following line to the [retriever] section of the getmail configuration file:

mailboxes = ("[Google Mail]/All Mail",)

This took a while to work out because many blog posts on the subject suggest that the mailbox name will include [GMail] but I found I needed to use [Google Mail] (I guess that could be the difference between GMail and the Google Mail service provided as part of Google Apps). After making the change I was able to download a few thousand messages, although it took a few tries (the good news is that getmail will skip messages it has already retrieved). Strangely, although the Google Mail web interface says that there are 3268 items in my All Mail folder, getmail finds 5320 (and, thankfully, doesn’t seem to include the spam, which would only account for 1012 of the difference anyway).
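For anyone wanting to check what actually landed on disk, a short script can count the messages in the maildir and the number of distinct Message-ID headers among them. This is a rough sketch using Python's standard `mailbox` module, not part of the original setup; `count_messages` is a hypothetical helper name, and it only shows whether the local copy contains repeated Message-IDs (it does not explain the web interface's figure).

```python
import mailbox
import os


def count_messages(maildir_path):
    """Return (total messages, distinct Message-ID values) for a maildir."""
    box = mailbox.Maildir(os.path.expanduser(maildir_path), create=False)
    total = 0
    ids = set()
    for msg in box:
        total += 1
        msg_id = msg.get("Message-ID")
        if msg_id:
            # Normalise to str so unusual header objects still hash consistently.
            ids.add(str(msg_id).strip())
    box.close()
    return total, len(ids)
```

For the archive in this post, the call would be `count_messages("~/GmailArchive/")`.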

In addition, the getmail help text explains that multiple mailboxes may be selected by adding to the tuple of quoted strings but, if there is just a single value, a trailing comma is required.
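The trailing comma matters because the value follows Python tuple syntax (getmail is itself written in Python). The snippet below illustrates the difference using the standard library's `ast.literal_eval`; that is an assumption standing in for however getmail really parses the option, and `parse_mailboxes` is a hypothetical name.

```python
import ast


def parse_mailboxes(value):
    """Evaluate a getmailrc-style mailboxes value as a Python literal."""
    return ast.literal_eval(value)


# With the trailing comma: a one-element tuple, which is what is wanted.
single_ok = parse_mailboxes('("[Google Mail]/All Mail",)')

# Without it: the parentheses are just grouping, so the result is a plain string.
single_bad = parse_mailboxes('("[Google Mail]/All Mail")')

# Two or more names form a tuple with or without a trailing comma.
several = parse_mailboxes('("INBOX", "[Google Mail]/All Mail")')

print(type(single_ok).__name__, len(single_ok))
print(type(single_bad).__name__)
print(type(several).__name__, len(several))
```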

Having tested manual mail retrieval, I set up a cron job to retrieve mail on a schedule. Daily would have been fine for backup purposes but I could also schedule a more frequent job to pull updates every few minutes:

crontab -e

launched vim to edit the cron table and I added the following line:

4,14,24,34,44,54 * * * * /opt/local/bin/getmail -ln --rcfile getmailrc.gmailarchive

I then opened up a terminal window and (because running lots of terminal windows makes me feel like a real geek) ran:

tail -f ~/.getmail/gmail.log

to watch as messages were automatically downloaded every 10 minutes at 4, 14, 24, 34, 44, and 54 minutes past the hour.

This also means that I get six messages an hour in my local system mailbox (/var/mail/username) to tell me how the cron job ran, so I chose to disable e-mail alerting for the cron job by appending >/dev/null 2>&1 to the crontab entry:

4,14,24,34,44,54 * * * * /opt/local/bin/getmail -ln --rcfile getmailrc.gmailarchive >/dev/null 2>&1

Many of the posts on this subject suggest using POP to download the mail, but Google limits POP transfers so it will require multiple downloads. Peng.u.i.n writes that IMAP should help to alleviate this (although that wasn’t my experience). He also suggests using several mbox files (instead of a single mbox file or a maildir) to back up mail (e.g. one file per calendar quarter) and Matt Cutts suggests backing up to mbox and maildir formats simultaneously:

[destination]

type = MultiDestination

destinations = ('[mboxrd-destination]', '[maildir-destination]')

[mboxrd-destination]

type = Mboxrd

path = ~/GmailArchive.mbox

[maildir-destination]

type = Maildir

path = ~/GmailArchive/

If you do decide to use an mbox file, then it will need to be created first using:

touch ~/GmailArchive.mbox
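The one-file-per-quarter idea mentioned earlier could also be applied after the fact, by sorting an existing maildir into quarterly mbox files. The sketch below is one possible approach using Python's standard `mailbox` module, not the method described in any of the posts cited here. The function names and the `2009Q1.mbox` naming scheme are hypothetical, and the files are written in whatever mbox variant Python's `mailbox.mbox` produces.

```python
import email.utils
import mailbox
import os


def quarter_name(date_header):
    """Map an RFC 2822 Date header to a label such as '2009Q1', or 'undated'."""
    if not date_header:
        return "undated"
    try:
        when = email.utils.parsedate_to_datetime(str(date_header))
    except (TypeError, ValueError):
        return "undated"
    return f"{when.year}Q{(when.month - 1) // 3 + 1}"


def split_by_quarter(maildir_path, out_dir):
    """Copy each maildir message into out_dir/<quarter>.mbox; return per-label counts."""
    source = mailbox.Maildir(os.path.expanduser(maildir_path), create=False)
    out_dir = os.path.expanduser(out_dir)
    os.makedirs(out_dir, exist_ok=True)
    boxes = {}   # label -> open mbox file
    counts = {}  # label -> number of messages copied
    for msg in source:
        label = quarter_name(msg.get("Date"))
        if label not in boxes:
            boxes[label] = mailbox.mbox(os.path.join(out_dir, label + ".mbox"))
        boxes[label].add(msg)
        counts[label] = counts.get(label, 0) + 1
    for box in boxes.values():
        box.flush()
        box.close()
    source.close()
    return counts
```

Running it twice against the same output folder would append the messages a second time, so it is best pointed at an empty folder.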

Chris Latko’s post on pulling mail out of Gmail and retaining the labels describes some extra steps. Notably, the timestamps on the downloaded mail are replaced with the time it was archived, so he uses a PHP script to read each message and restore the original modification time.
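The same idea can be sketched in Python. To be clear, this is not Chris Latko's PHP script, just a minimal illustration of the technique: it reads each message's Date header and sets the file's modification time to match. The function names are hypothetical, and messages with a missing or unparseable Date header are left untouched.

```python
import email
import email.utils
import os


def restore_mtime(path):
    """Set a message file's mtime from its Date header; return True if it was changed."""
    with open(path, "rb") as fh:
        msg = email.message_from_binary_file(fh)
    date_header = msg.get("Date")
    if not date_header:
        return False
    try:
        when = email.utils.parsedate_to_datetime(str(date_header))
    except (TypeError, ValueError):
        return False
    stamp = when.timestamp()
    os.utime(path, (stamp, stamp))  # sets both access and modification time
    return True


def restore_maildir(maildir_path):
    """Apply restore_mtime to every file under new/ and cur/; return how many changed."""
    root = os.path.expanduser(maildir_path)
    changed = 0
    for sub in ("new", "cur"):
        folder = os.path.join(root, sub)
        if not os.path.isdir(folder):
            continue
        for name in os.listdir(folder):
            if restore_mtime(os.path.join(folder, name)):
                changed += 1
    return changed
```

For the archive in this post, the call would be `restore_maildir("~/GmailArchive/")`.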

Aside from the MacPorts installation, the process is the same on a Unix/Linux machine and, for Windows users, Gina Trapani has written about backing up GMail using fetchmail with Cygwin as the platform.
