Earlier this week I saw a tweet from the MIX 2011 conference that highlighted how Microsoft’s Office 365 software-as-a-service platform runs entirely on their Hyper-V hypervisor.

There are those (generally those who have a big investment in VMware technologies) who say Microsoft’s hypervisor lacks the features to make it suitable for use in the enterprise. I don’t know how much bigger you have to get than Office 365, but the choice of hypervisor is becoming less and less relevant as we move away from infrastructure and concentrate more on the platform.

Even so, now that Hyper-V has reached the magical version 3 milestone (at which people generally start to accept Microsoft products) I thought it was worth a post to look back at where Hyper-V has come from, and where it’s at now:

- Windows Server 2008 shipped in February 2008 with a beta version of Hyper-V and the final hypervisor was released as a Windows update in July 2008. (System Center Virtual Machine Manager followed in October 2008).

- Windows Server 2008 R2 shipped in July 2009, including a new version of Hyper-V with live migration capabilities (SCVMM 2008 R2 followed in September 2009).

- Windows Server 2008 R2 Service Pack 1 includes the third Hyper-V release, bringing new dynamic memory and RemoteFX capabilities. (At around the same time, SCVMM 2008 R2 was updated to support Hyper-V v3 and the SCVMM 2012 beta was announced).

- Windows 7 and Windows Server 2008 R2 have been awarded Common Criteria Certification at EAL4+.

- There are two ways to get Hyper-V – the free Hyper-V Server product (which shipped with SP1 included earlier this week) and the Hyper-V role in Windows Server 2008 R2. Since Windows Server 2008 R2, both versions have been identical in capabilities – the difference is that Hyper-V Server is based on the Server Core installation option of Windows (i.e. limited GUI) and comes with no virtualisation usage rights.

- In terms of supported guest operating systems, support means that Microsoft will fix the hypervisor or the guest operating system. Microsoft supports:

  - Windows 2003 SP2/XP SP3 or later, but some custom support agreements go back to 2000 SP4.

  - SLES 10 SP3 – 11 with an appropriate support agreement.

  - RHEL 5.2 – 5.5 with an appropriate support agreement.

- Integration components (the Hyper-V equivalent of virtual machine additions) are provided for these operating systems. Other operating systems will run, but using emulated devices.

- Microsoft is also contributing code into the Linux driver repository.

Looking at some of the technical features:

- Dynamic memory requires Windows Server 2003 SP2 or later (and is not yet supported for Linux guests). It’s important to understand the difference between oversubscription and overcommitment.

- Performance is now close enough between hypervisors that it is no longer a differentiator.

- Hyper-V uses Windows clustering for high availability – the same technology as is used for live migration.

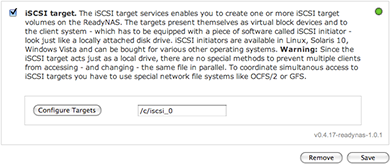

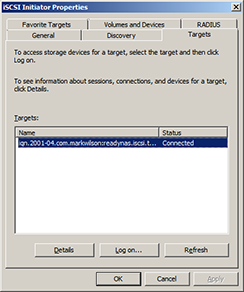

- In terms of storage scalability – it’s up to the customer to choose how to slice/dice storage – with partner support for multipathing, hardware snapshotting, etc. Hyper-V users can have one LUN for each VM, or a single LUN for 1000 VMs (of course, no-one would actually do this).

- Networking also uses the partner ecosystem – for example, HP creates software to allow NIC teaming on its servers, and Hyper-V can use a virtual switch to point to the resulting team.

- In terms of data protection, the volume shadow copy service on the host is used and there are a number of choices to make around agent placement. A single agent can be deployed to the host, with all guests protected (allowing whole machine recovery) or guests can have their own agents to allow backups at the application level (for Exchange, SQL Server, etc.).
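
The post doesn’t spell out the oversubscription/overcommitment distinction mentioned under dynamic memory, so here is a rough sketch of one common reading of it (think of an airline: overselling seats versus everyone actually turning up). This is an assumption on my part, not a definition from Microsoft, and `GuestMemory`, `is_oversubscribed`, `is_overcommitted` and the figures used are all hypothetical names and numbers for illustration, not any Hyper-V API:

```python
from dataclasses import dataclass


@dataclass
class GuestMemory:
    """Hypothetical per-VM memory figures, in MB (not a Hyper-V API)."""
    name: str
    maximum_mb: int  # ceiling the guest is allowed to grow to
    demand_mb: int   # memory the guest actually wants right now


def is_oversubscribed(guests, host_mb):
    """True when the guests' combined ceilings exceed physical memory."""
    return sum(g.maximum_mb for g in guests) > host_mb


def is_overcommitted(guests, host_mb):
    """True when the guests' combined live demand exceeds physical memory."""
    return sum(g.demand_mb for g in guests) > host_mb


HOST_MB = 8192  # assumed: 8 GB of host memory available to guests

quiet = [
    GuestMemory("web", maximum_mb=4096, demand_mb=2048),
    GuestMemory("sql", maximum_mb=4096, demand_mb=2048),
    GuestMemory("file", maximum_mb=4096, demand_mb=1024),
]

# Same guests, but two of them now want their full ceiling at once.
busy = [
    GuestMemory("web", maximum_mb=4096, demand_mb=4096),
    GuestMemory("sql", maximum_mb=4096, demand_mb=4096),
    GuestMemory("file", maximum_mb=4096, demand_mb=1024),
]

for label, guests in (("quiet", quiet), ("busy", busy)):
    print(label, is_oversubscribed(guests, HOST_MB), is_overcommitted(guests, HOST_MB))
```

On this reading, both sets of guests are oversubscribed (the ceilings add up to more than the host has), but only the busy set is overcommitted, and that is the point at which something has to give.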

I’m sure that competitor products have a longer list of features but, in terms of capability, Hyper-V is “good enough” for most scenarios I can think of. I’d be interested to hear what barriers to enterprise adoption people see for Hyper-V.