I had an interesting discussion with a colleague today, who was arguing that there is no such thing as private cloud – it’s just virtualisation, rebranded.

Whilst I agree with his sentiment (many organisations claiming to have implemented private clouds have really just virtualised their server estate), I do think that private clouds can exist.

Cloud is a new business model, but the difference between traditional hosting and cloud computing is more than just commercial. The NIST definition of cloud computing is becoming more and more widely accepted and it defines five essential characteristics, three service models and four deployment models.

The essential characteristics are:

- “On-demand self-service. A consumer can unilaterally provision computing capabilities, such as server time and network storage, as needed automatically without requiring human interaction with each service provider.

- Broad network access. Capabilities are available over the network and accessed through standard mechanisms that promote use by heterogeneous thin or thick client platforms (e.g., mobile phones, tablets, laptops, and workstations).

- Resource pooling. The provider’s computing resources are pooled to serve multiple consumers using a multi-tenant model, with different physical and virtual resources dynamically assigned and reassigned according to consumer demand. There is a sense of location independence in that the customer generally has no control or knowledge over the exact location of the provided resources but may be able to specify location at a higher level of abstraction (e.g., country, state, or datacenter). Examples of resources include storage, processing, memory, and network bandwidth.

- Rapid elasticity. Capabilities can be elastically provisioned and released, in some cases automatically, to scale rapidly outward and inward commensurate with demand. To the consumer, the capabilities available for provisioning often appear to be unlimited and can be appropriated in any quantity at any time.

- Measured service. Cloud systems automatically control and optimize resource use by leveraging a metering capability at some level of abstraction appropriate to the type of service (e.g., storage, processing, bandwidth, and active user accounts). Resource usage can be monitored, controlled, and reported, providing transparency for both the provider and consumer of the utilized service.”

and NIST’s private cloud definition is:

“Private cloud. The cloud infrastructure is provisioned for exclusive use by a single organization comprising multiple consumers (e.g., business units). It may be owned, managed, and operated by the organization, a third party, or some combination of them, and it may exist on or off premises.”

If anything, the NIST definition is incomplete (it doesn’t recognise any service models beyond infrastructure-, platform- and software-as-a-service – I’d add business process as a service too) but the rest is pretty spot on.

Looking at each of the characteristics and comparing them to a simple virtualisation of existing IT:

- On-demand self-service: virtualisation alone doesn’t cover this, so private clouds need to include another technology layer to enable this functionality.

- Broad network access: nothing controversial there, I think.

- Resource pooling: I agree, standard virtualisation functionality.

- Rapid elasticity: this is where private cloud struggles against public (bursting to public via a hybrid solution might help, if feasible from a governance/security perspective) but, with suitable capacity management in place, private virtualised infrastructure deployments can be elastic.

- Measured service: again, an additional layer of technology is required in order to provide this functionality – more than just a standard virtualised solution.
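To make that comparison a little more concrete, here is a minimal sketch of it as a checklist. It only encodes my own assessment from the list above; the names (`Coverage`, `ASSESSMENT`, `gaps`, `is_private_cloud`) are made up for illustration and don't come from NIST or from any product.

```python
from enum import Enum


class Coverage(Enum):
    """How far plain virtualisation gets you (my assessment, not NIST's)."""
    COVERED = "covered by virtualisation alone"
    NEEDS_PROCESS = "possible with suitable capacity management"
    NEEDS_LAYER = "needs an additional technology layer"


# The five NIST essential characteristics, mapped to the assessment above.
ASSESSMENT = {
    "on-demand self-service": Coverage.NEEDS_LAYER,
    "broad network access": Coverage.COVERED,
    "resource pooling": Coverage.COVERED,
    "rapid elasticity": Coverage.NEEDS_PROCESS,
    "measured service": Coverage.NEEDS_LAYER,
}


def gaps(assessment):
    """Return the characteristics that virtualisation alone does not deliver."""
    return [name for name, cover in assessment.items()
            if cover is not Coverage.COVERED]


def is_private_cloud(delivered):
    """True only if every essential characteristic is actually delivered."""
    return all(name in delivered for name in ASSESSMENT)


if __name__ == "__main__":
    for name in gaps(ASSESSMENT):
        print(f"{name}: {ASSESSMENT[name].value}")
```

A virtualised server estate that only delivers broad network access and resource pooling fails `is_private_cloud`, which is really my colleague's point; add the self-service, elasticity and metering pieces and it passes.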

All of this is possible to achieve internally (i.e. privately), and it’s important to note that it’s no good just porting existing applications to a virtualised infrastructure – they need to be re-architected to take advantage of these characteristics. But I’m pretty sure there is more to private cloud than just virtualisation with a new name…

As for whether there is a long-term place for private cloud… that’s an entirely separate question!

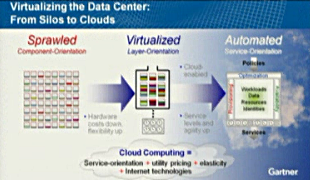

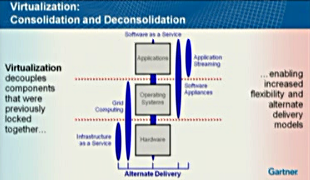

Virtualisation is not really about consolidation. It’s actually the decoupling of components that were previously combined – the application, operating system and hardware – to provide some level of abstraction. A hypervisor is just a service provider for compute resource to a virtual machine. Decoupling is only one part of what’s happening though, as the services may be delivered in different ways – what Gartner describes as
