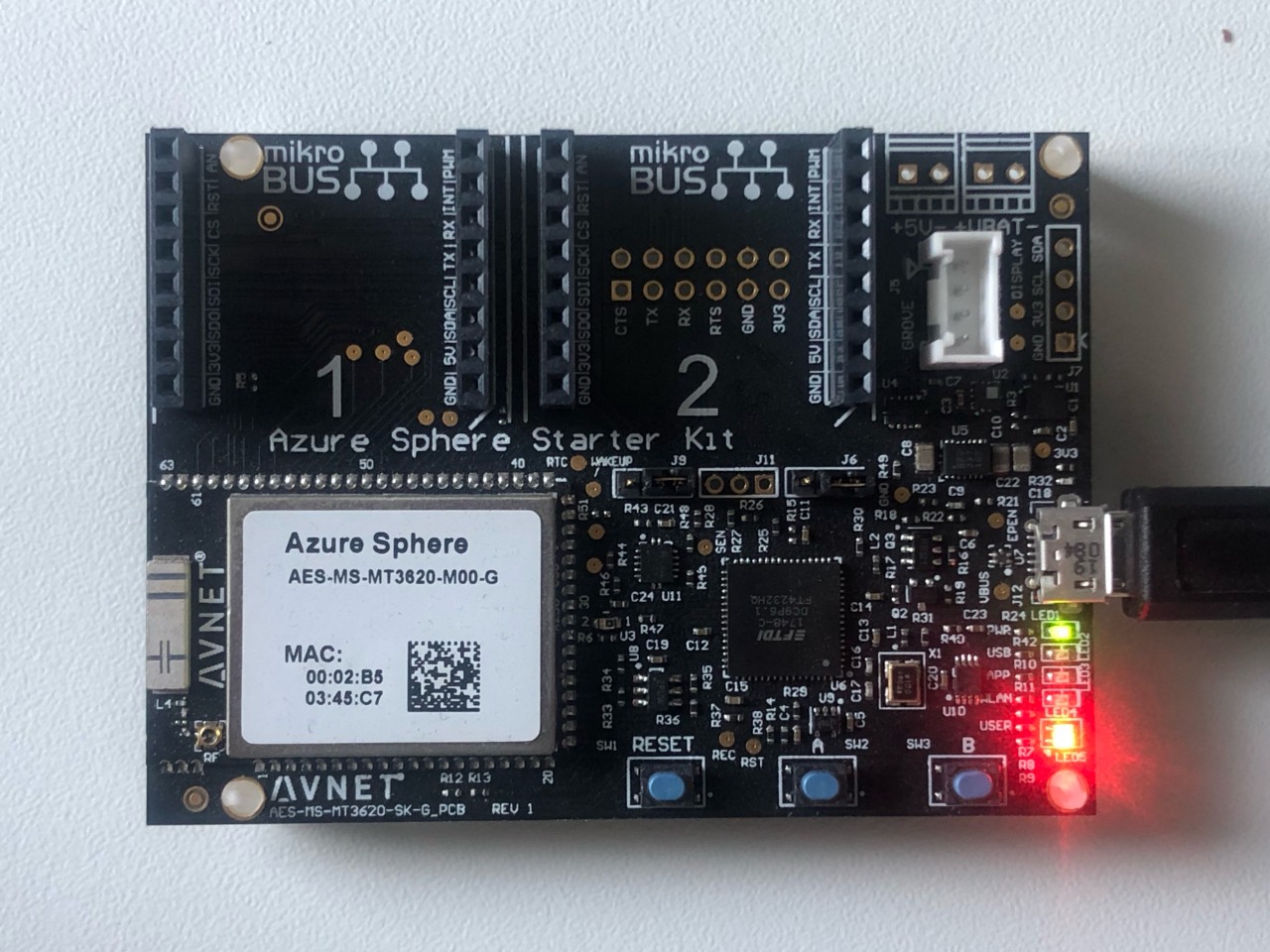

Last week, I wrote about my experiences getting some sample code running on an Avnet Azure Sphere Starter Kit. That first post walked through installing the SDK, setting up my development environment (I chose to use Visual Studio Code), configuring the device (including creating a tenant, claiming the device, connecting the device to Wi-Fi, and updating the OS), and downloading and deploying a sample app.

Since then, I’ve made some progress with the Element 14 out-of-the-box demo by Brian Willess (part 1, part 2 and part 3). Rather than repeat Brian’s posts, I’ll focus on what I did to work around a few challenges along the way.

Working around compiler errors in Visual Studio Code using the command line

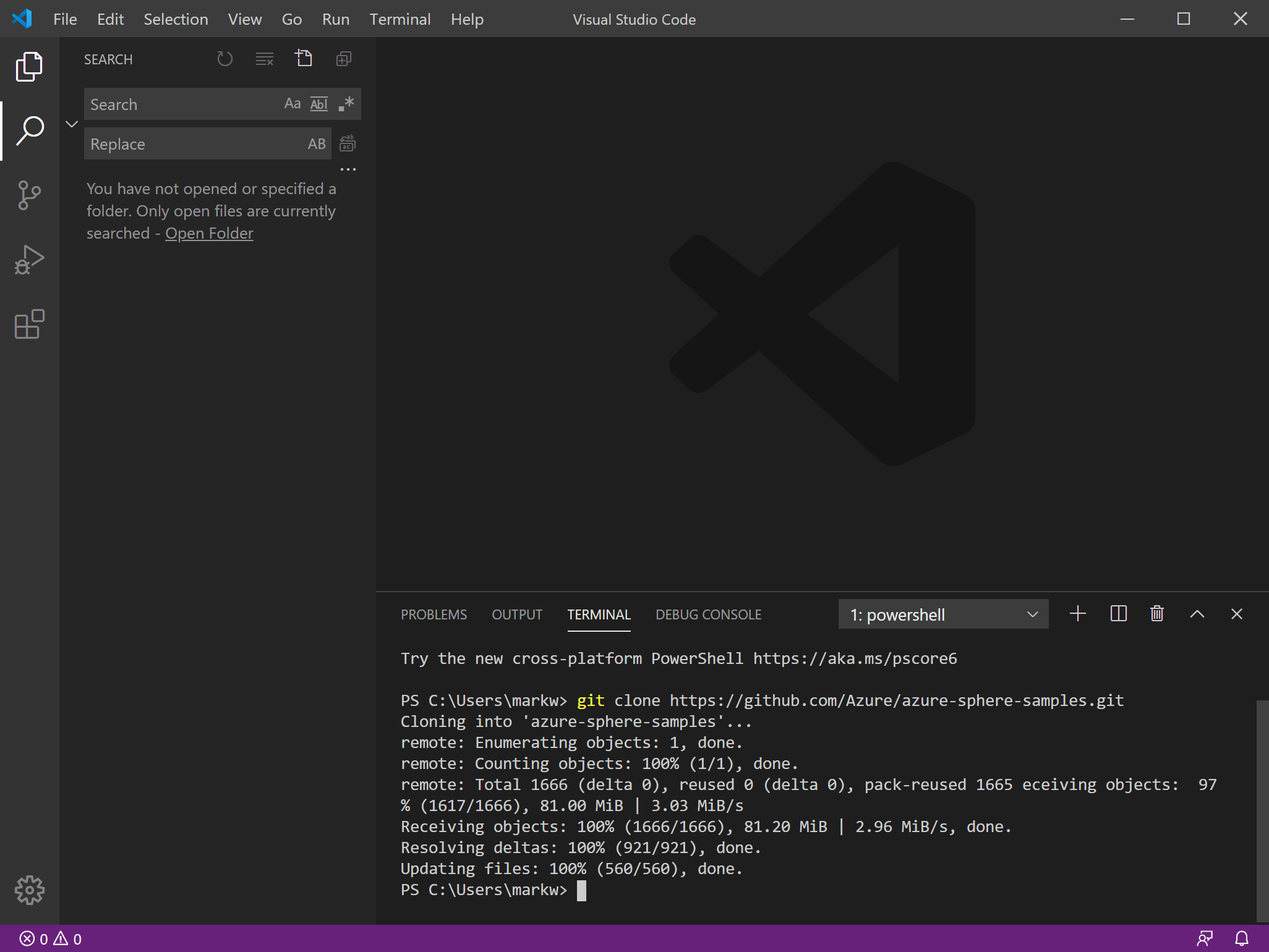

My first issue was that the Element 14 blogs are based on Visual Studio, not Visual Studio Code, and Code kept complaining that it couldn’t find a compiler.

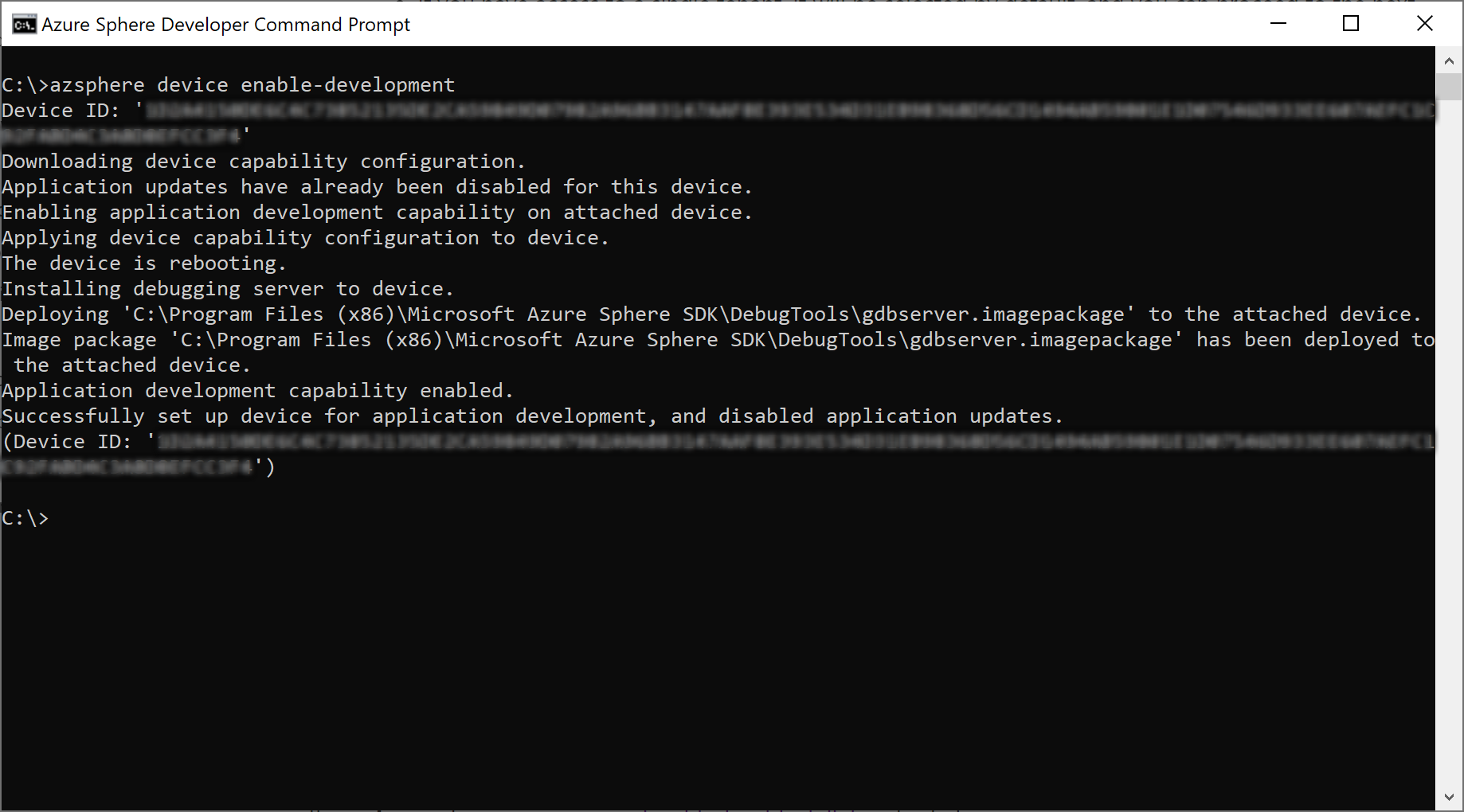

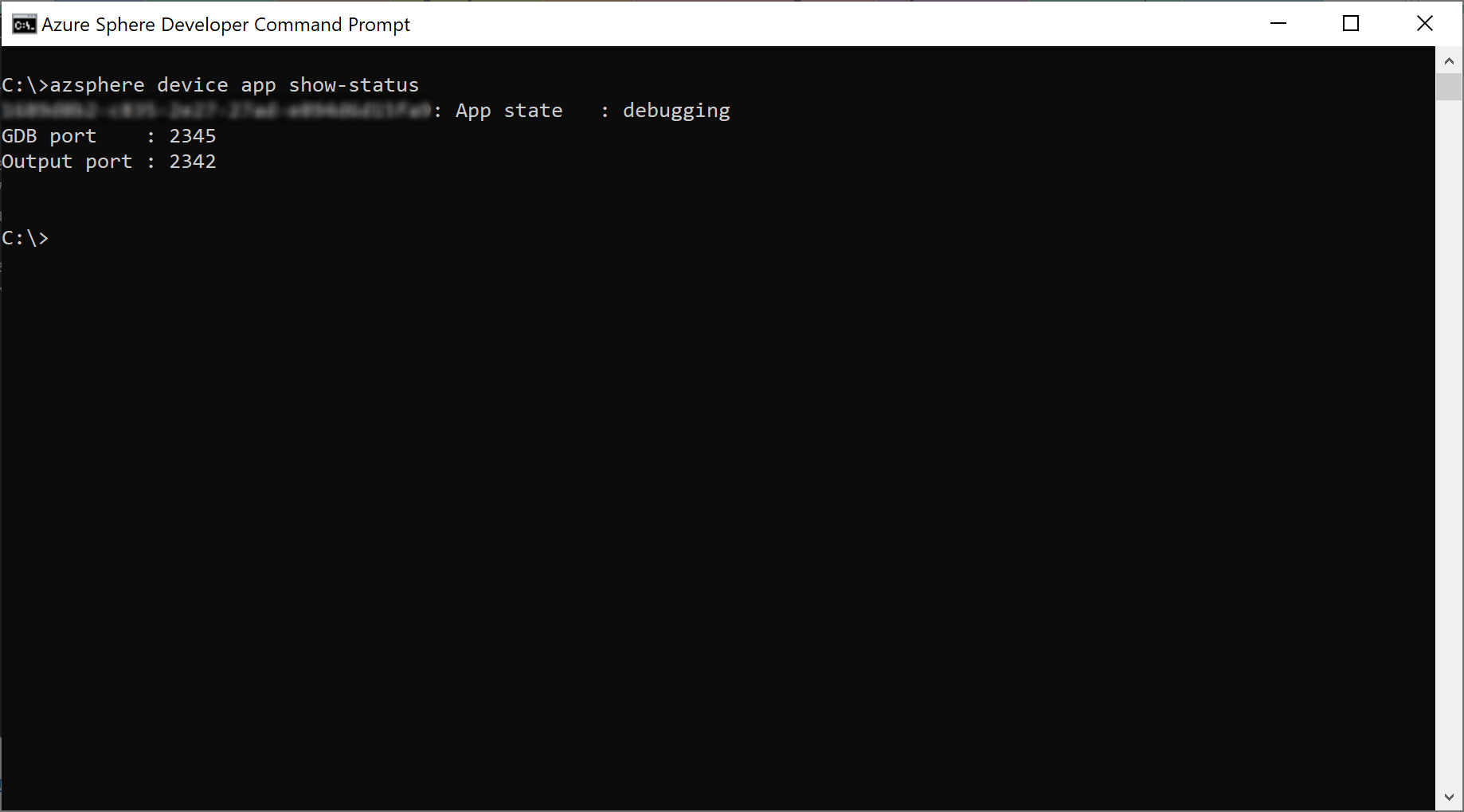

Thanks to my colleague Andrew Hawker, who was also experimenting with his Starter Kit (but using a Linux VM), I had a workaround: run CMake and Ninja from the command line, then sideload the resulting app package onto the device from the Azure Sphere Developer Command Prompt:

```bat
cmake ^
 -G "Ninja" ^
 -DCMAKE_TOOLCHAIN_FILE="C:\Program Files (x86)\Microsoft Azure Sphere SDK\CMakeFiles\AzureSphereToolchain.cmake" ^
 -DAZURE_SPHERE_TARGET_API_SET="4" ^
 -DAZURE_SPHERE_TARGET_HARDWARE_DEFINITION_DIRECTORY="C:\Users\%username%\AzureSphereHacksterTTC\Hardware\avnet_mt3620_sk" ^
 -DAZURE_SPHERE_TARGET_HARDWARE_DEFINITION="avnet_mt3620_sk.json" ^
 --no-warn-unused-cli ^
 -DCMAKE_BUILD_TYPE="Debug" ^
 -DCMAKE_MAKE_PROGRAM="ninja.exe" ^
 "C:\Users\%username%\AzureSphereHacksterTTC\AvnetStarterKitReferenceDesign"

ninja

azsphere device sideload deploy --imagepackage AvnetStarterKitReferenceDesign.imagepackage
```
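If retyping that command gets tedious, the same three steps can be scripted. The following is a minimal Python sketch, not part of Brian's project: the function names `build_commands` and `run_all` are hypothetical, the paths, API set and hardware definition are copied from the command above, and it assumes `cmake`, `ninja` and `azsphere` are on the PATH (as they are in the Azure Sphere Developer Command Prompt). Building the argument lists is kept separate from running them so the commands can be inspected first.

```python
import shutil
import subprocess
from pathlib import PureWindowsPath

# Toolchain file location copied from the command above; adjust if the SDK lives elsewhere.
SDK_TOOLCHAIN = PureWindowsPath(
    r"C:\Program Files (x86)\Microsoft Azure Sphere SDK\CMakeFiles\AzureSphereToolchain.cmake"
)


def build_commands(repo_root: str, api_set: str = "4",
                   build_type: str = "Debug") -> list[list[str]]:
    """Return the cmake, ninja and sideload steps as argument lists (nothing is run)."""
    root = PureWindowsPath(repo_root)
    hw_dir = root / "Hardware" / "avnet_mt3620_sk"
    source_dir = root / "AvnetStarterKitReferenceDesign"
    cmake = [
        "cmake",
        "-G", "Ninja",
        f"-DCMAKE_TOOLCHAIN_FILE={SDK_TOOLCHAIN}",
        f"-DAZURE_SPHERE_TARGET_API_SET={api_set}",
        f"-DAZURE_SPHERE_TARGET_HARDWARE_DEFINITION_DIRECTORY={hw_dir}",
        "-DAZURE_SPHERE_TARGET_HARDWARE_DEFINITION=avnet_mt3620_sk.json",
        "--no-warn-unused-cli",
        f"-DCMAKE_BUILD_TYPE={build_type}",
        "-DCMAKE_MAKE_PROGRAM=ninja.exe",
        str(source_dir),
    ]
    ninja = ["ninja"]
    sideload = ["azsphere", "device", "sideload", "deploy",
                "--imagepackage", "AvnetStarterKitReferenceDesign.imagepackage"]
    return [cmake, ninja, sideload]


def run_all(commands: list[list[str]], build_dir: str) -> None:
    """Run each step inside build_dir, stopping at the first failure."""
    for argv in commands:
        # shutil.which honours PATHEXT on Windows, so .exe and .cmd wrappers both resolve.
        exe = shutil.which(argv[0])
        if exe is None:
            raise FileNotFoundError(f"{argv[0]} not found on PATH")
        subprocess.run([exe, *argv[1:]], cwd=build_dir, check=True)
```

Something like `run_all(build_commands(r"C:\Users\<you>\AzureSphereHacksterTTC"), build_dir="out")` would then repeat the build and sideload, assuming the `out` directory already exists. Passing arguments as a list avoids the `^` continuation and quoting rules of the command prompt entirely.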

I wasn’t able to view the debug output (despite my efforts to use PuTTY to read 192.168.35.2:2342), but I was confident that the app was working on the device, so I moved on to integrating with cloud services.
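As an alternative to PuTTY, a few lines of Python can connect to the same address and collect whatever text arrives. This is a minimal sketch that assumes the application's output is streamed as plain text over TCP at 192.168.35.2:2342, as the address above implies; whether anything is actually sent may depend on how the app was started. The function name `read_debug_output` is hypothetical.

```python
import socket


def read_debug_output(host: str = "192.168.35.2", port: int = 2342,
                      max_lines: int = 50, timeout: float = 10.0) -> list[str]:
    """Connect to host:port and collect up to max_lines lines of text."""
    lines: list[str] = []
    buffer = b""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        while len(lines) < max_lines:
            try:
                chunk = sock.recv(4096)
            except socket.timeout:
                break  # nothing arrived within the timeout window
            if not chunk:
                break  # the other end closed the connection
            buffer += chunk
            # Split complete lines out of the buffer; keep any partial line for later.
            while b"\n" in buffer and len(lines) < max_lines:
                raw, buffer = buffer.split(b"\n", 1)
                lines.append(raw.decode("utf-8", errors="replace").rstrip("\r"))
    if buffer and len(lines) < max_lines:
        # Keep trailing text that never got a newline.
        lines.append(buffer.decode("utf-8", errors="replace").rstrip("\r"))
    return lines
```

Calling `for line in read_debug_output(): print(line)` with the device attached would print up to 50 lines, or stop after 10 seconds of silence.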

Brian Willess has since updated the repo so it should now work with Visual Studio Code (at least for the high-level application), and I have successfully tested the non-connected scenario (part 1) with the changes.

Integration with Azure IoT Hub, device twins and Azure Time Series Insights

Part 2 of the series I was working through is where the integration starts. The basic steps (refer to Brian Willess’ post for full details) were:

- Create an Azure IoT Hub, a cloud-hosted back end for secure communication with Internet of Things (IoT) devices, of which the Azure Sphere is just one of many options.

- Create and configure the IoT Hub Device Provisioning Service (DPS), including:

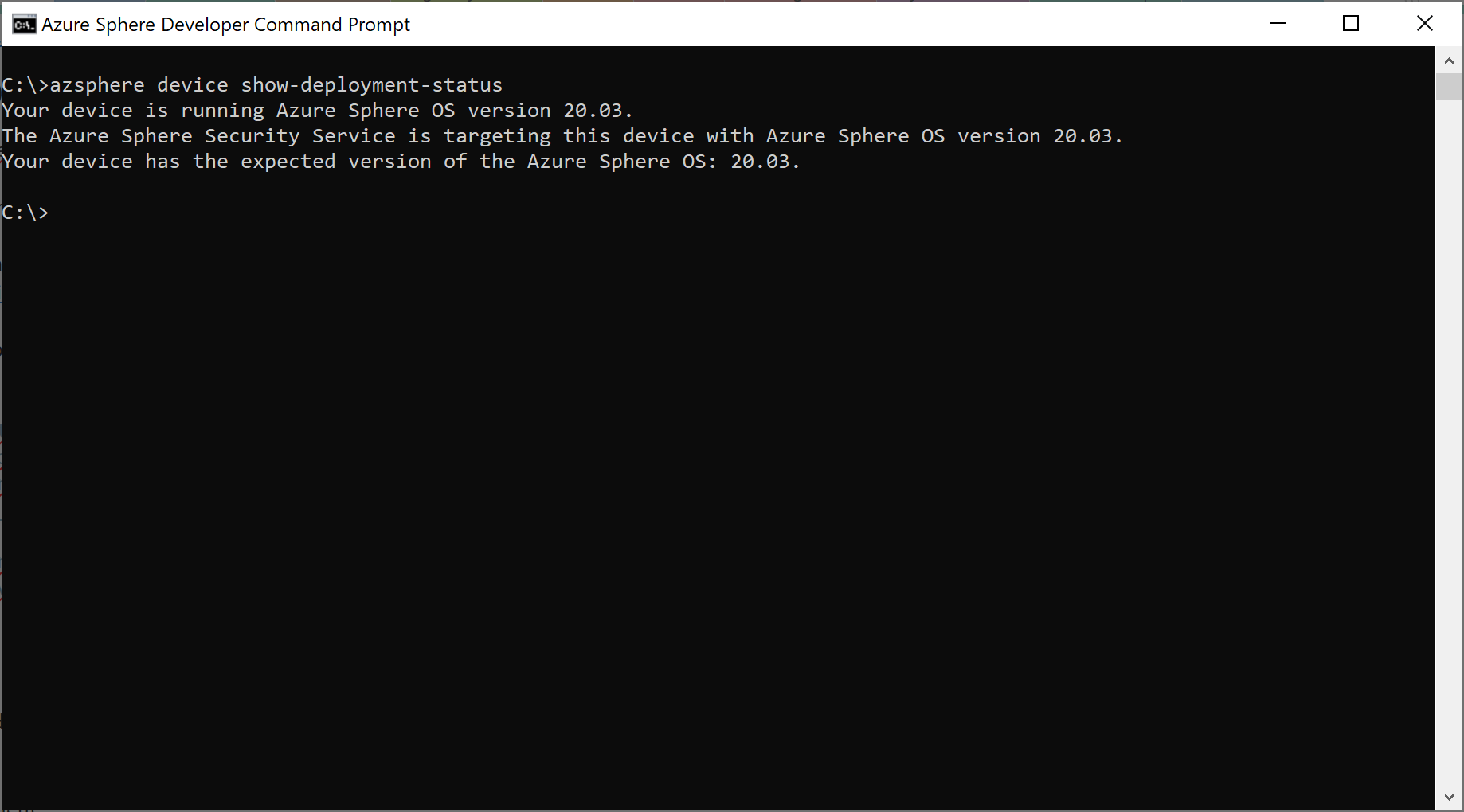

  - Downloading a certificate from the Azure Sphere tenant (using `azsphere tenant download-CA-certificate --output CAcertificate.cer` at the Azure Sphere Developer Command Prompt) and using this to authenticate with the DPS, including validation with the verification code generated by the Azure portal (`azsphere tenant download-validation-certificate --output validation.cer --verificationcode verificationcode`) and uploading the resulting certificate to the portal.

  - Creating an Enrollment Group, to enrol any newly-claimed device whose certificate is signed by my tenant. This stage also includes the creation of an initial device twin state, editing the JSON to include some extra lines: `"userLedRed": false, "userLedGreen": false, "userLedBlue": true`. The initial blue illumination of the LED means that we can see when the Azure Sphere has successfully connected to the IoT Hub.

- Edit the application source code (I used Visual Studio Code, but any editor will do) to:

  - Uncomment `#define IOT_HUB_APPLICATION` in build_options.h.

  - Update the `CmdArgs` line in app_manifest.json with the ID Scope from the DPS Overview in the Azure portal.

  - Update the `AllowedConnections` line in app_manifest.json with the FQDNs from the DPS Overview (Global Device Endpoint) and the IoT Hub (Hostname) in the Azure portal.

  - Update the `DeviceAuthentication` line in app_manifest.json with the Azure Sphere tenant ID (which may be obtained using `azsphere tenant show-selected` at the Azure Sphere Developer Command Prompt).

- Build and run the app. I used the CLI as detailed above, but this should now be possible within Visual Studio Code.

- Use the device twin capabilities to manipulate the device, for example turning LEDs on/off (though clearly there are more complex scenarios that could be used in real deployments!).

- Create a Time Series Insights resource in Azure, which is an analytics solution to turn IoT data into actionable insights.

  - Create the Time Series Insights environment using the existing IoT Hub with an access policy of `iothubowner` and a consumer group of `$Default`.

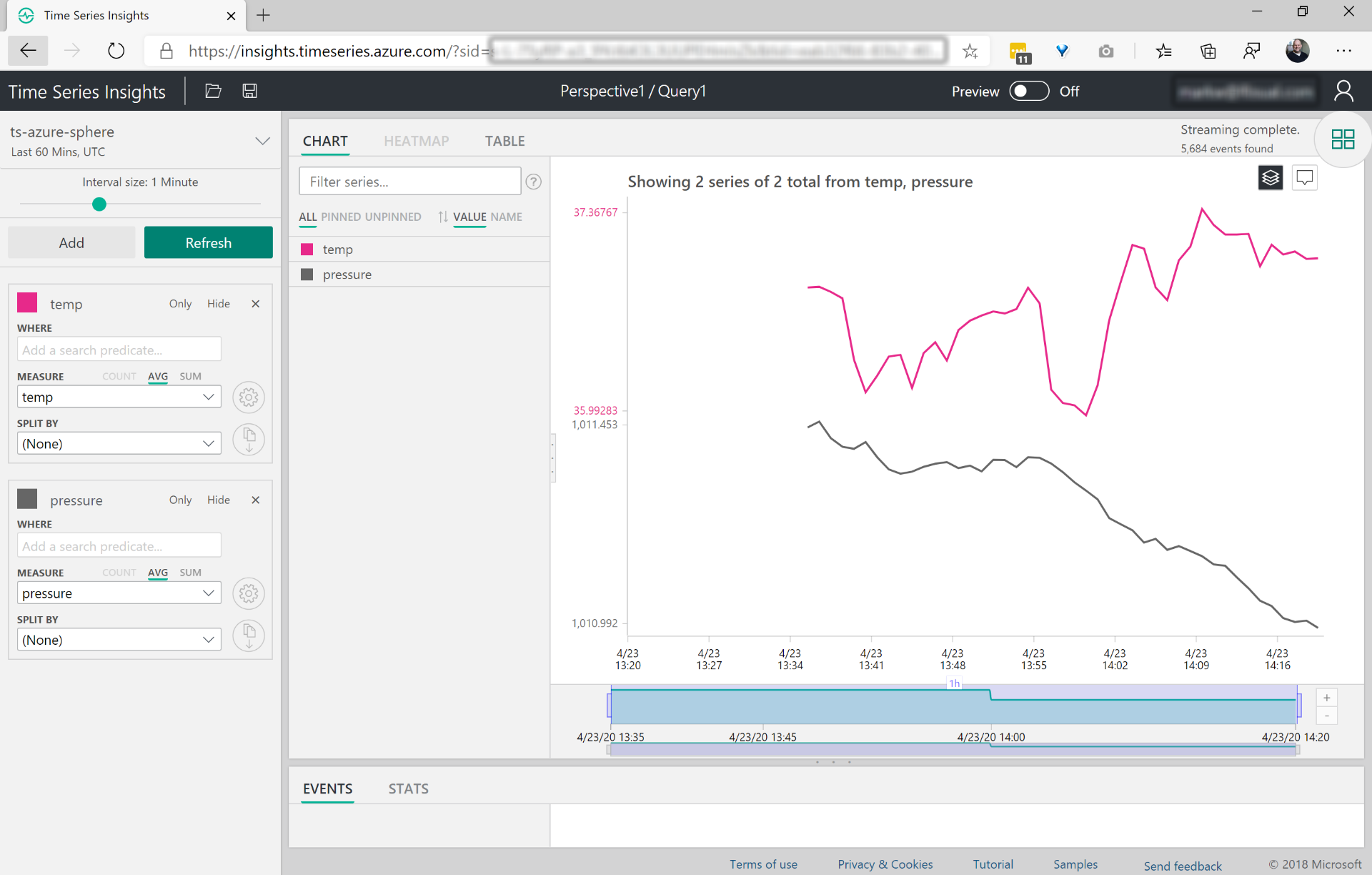

- Add events inside the Time Series Insights to view the sensor readings from the Azure Sphere device.
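For reference, the initial device twin state set in the Enrollment Group step is just a JSON document. The minimal Python sketch below builds one with the blue LED on, plus a later desired-property patch of the kind used to switch LEDs from the cloud. It assumes the LED settings sit under `properties.desired`, following the portal's default `{"tags": {}, "properties": {"desired": {}}}` template; the helper names `initial_twin_state` and `led_patch` are hypothetical.

```python
import json

LED_PROPERTIES = {"userLedRed", "userLedGreen", "userLedBlue"}


def initial_twin_state(red: bool = False, green: bool = False, blue: bool = True) -> dict:
    """Initial twin for the Enrollment Group: blue on, so a successful connection is visible."""
    return {
        "tags": {},
        "properties": {
            "desired": {
                "userLedRed": red,
                "userLedGreen": green,
                "userLedBlue": blue,
            }
        },
    }


def led_patch(**leds: bool) -> dict:
    """Desired-property patch for a running device, e.g. led_patch(userLedGreen=True)."""
    unknown = set(leds) - LED_PROPERTIES
    if unknown:
        raise ValueError(f"unknown LED properties: {sorted(unknown)}")
    return {"properties": {"desired": dict(leds)}}


# Paste-ready JSON for the Enrollment Group's initial twin state.
print(json.dumps(initial_twin_state(), indent=2))
```

Keeping the property names in one set means a typo such as `userLedGren` fails loudly instead of silently adding a property the device ignores.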

Time Series Insights can get expensive for a simple test project that delivers no real value: I could quickly have used my entire month’s Azure credits, so I deleted the resource group containing my Azure Sphere resources before moving on to the next section…

Integration with Azure IoT Central

Azure IoT Central is a hosted IoT platform. It is intended to take away much of the underlying complexity and let organisations quickly build IoT solutions using just a web interface.

Following part 3 in Brian Willess’ Azure Sphere series, I was able to get my device working with IoT Central, both using the web interface to control the LEDs on the board and pushing sensor data to a dashboard. As before, these are the basic steps; refer to Brian Willess’ post for full details:

- Create a new IoT Central application.

- Select or create a template:

  - Use the IoT device custom template.

  - Either import an existing capability model (this was mine) or create one, adding interfaces (sensors, buttons, information, etc.) and capabilities.

  - Create custom views, e.g. for LED device control or for device metrics.

  - Publish the template.

- Configure DPS:

  - Download a certificate from the Azure Sphere tenant using `azsphere tenant download-CA-certificate --output CAcertificate.cer` at the Azure Sphere Developer Command Prompt. (This is the same certificate already generated for the IoT Hub example.)

  - Upload the certificate to IoT Central and generate a validation code, then use `azsphere tenant download-validation-certificate --output validation.cer --verificationcode verificationcode` to create a validation certificate from it.

  - Upload the new validation certificate.

- Create a non-simulated device in IoT Central:

  - Run `ShowIoTCentralConfig.exe`, providing the ID Scope and a shared access signature key for the device (both obtained from the Device Connection details in IoT Central) and the Device ID (from the device just created). Make a note of the details provided by the tool.

- Configure the application source code to connect to IoT Central:

  - Uncomment `#define IOT_CENTRAL_APPLICATION` in build_options.h.

  - Update the `CmdArgs` line in app_manifest.json with the ID Scope obtained from the Device Connection details in IoT Central.

  - Update the `AllowedConnections` line in app_manifest.json with the FQDNs obtained by running `ShowIoTCentralConfig.exe`.

  - Update the `DeviceAuthentication` line in app_manifest.json with the Azure Sphere tenant ID (which may be obtained using `azsphere tenant show-selected` at the Azure Sphere Developer Command Prompt).

- Build and run the application.

- Associate the Azure Sphere device with IoT Central (the device created previously was just a “dummy” to get some configuration details). IoT Central should have found the real device, but it will need to be “migrated” to the appropriate device group to pick up the template created earlier.

- Open the device and enjoy the data!
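The source-code changes are the same shape for both the IoT Hub and IoT Central builds: uncomment one `#define`, then set three values in app_manifest.json. Here is a minimal Python sketch of those edits. It assumes the define is commented out with `//` on its own line, that `CmdArgs` should contain only the ID Scope, and that `AllowedConnections` and `DeviceAuthentication` live under the manifest's `Capabilities` object; the function names and the example scope, host and tenant values are hypothetical.

```python
import json
import re
from pathlib import Path


def enable_define(header_text: str, name: str) -> str:
    """Uncomment a '//#define NAME' (or '// #define NAME') line in a C header."""
    commented = re.compile(rf"^([ \t]*)//[ \t]*(#define[ \t]+{re.escape(name)}\b)", re.MULTILINE)
    new_text, count = commented.subn(r"\1\2", header_text)
    already_on = re.search(rf"^[ \t]*#define[ \t]+{re.escape(name)}\b", header_text, re.MULTILINE)
    if count == 0 and not already_on:
        raise ValueError(f"no #define {name} found")
    return new_text


def configure_manifest(manifest: dict, id_scope: str, hosts: list[str], tenant_id: str) -> dict:
    """Return a copy of app_manifest.json content with the connection settings filled in."""
    updated = json.loads(json.dumps(manifest))  # deep copy; the input is left untouched
    updated["CmdArgs"] = [id_scope]
    caps = updated.setdefault("Capabilities", {})
    caps["AllowedConnections"] = list(hosts)
    caps["DeviceAuthentication"] = tenant_id
    return updated


def apply_to_project(project_dir: str, define: str, id_scope: str,
                     hosts: list[str], tenant_id: str) -> None:
    """Edit build_options.h and app_manifest.json in place."""
    root = Path(project_dir)
    header = root / "build_options.h"
    header.write_text(enable_define(header.read_text(encoding="utf-8"), define), encoding="utf-8")
    manifest_path = root / "app_manifest.json"
    manifest = json.loads(manifest_path.read_text(encoding="utf-8"))
    updated = configure_manifest(manifest, id_scope, hosts, tenant_id)
    manifest_path.write_text(json.dumps(updated, indent=2), encoding="utf-8")
```

For the IoT Central build, `define` would be `"IOT_CENTRAL_APPLICATION"` and `hosts` the FQDNs reported by `ShowIoTCentralConfig.exe`; for the IoT Hub build, `"IOT_HUB_APPLICATION"` with the DPS global endpoint and the hub's hostname.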

It’s amazing how much fun turning some LEDs on/off from #Azure IoT Central can be #AzureSphere! pic.twitter.com/3YCETiOTZm

– Mark Wilson (@markwilsonit) April 23, 2020

I hadn’t expected IoT Central to cost much (if anything, because the first two devices are free), but I think the app I’m using is pretty chatty, so I’m being charged for extra messages: 30,000 a month sounds like a lot until you realise it’s only around 40 an hour on a device that’s sending frequent updates to and from the service. It seems to be costing just under £1/day (from a pool of credits), so I won’t be worrying too much!
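The "only around 40 an hour" figure is easy to check, and the same arithmetic shows how a reporting interval turns into a monthly message count. This minimal Python sketch assumes a 30-day month and one message per update; it does not model how IoT Central actually meters messages (for example per-device allowances or message-size rules), and the function names are hypothetical.

```python
HOURS_PER_MONTH = 30 * 24  # assumption: a 30-day month


def messages_per_hour(monthly_allowance: int, hours: int = HOURS_PER_MONTH) -> float:
    """Spread a monthly message allowance evenly over the month."""
    return monthly_allowance / hours


def messages_per_month(interval_seconds: float, hours: int = HOURS_PER_MONTH) -> int:
    """Messages from a device that sends one message every interval_seconds."""
    return int(hours * 3600 // interval_seconds)


def messages_over_allowance(interval_seconds: float, monthly_allowance: int = 30_000) -> int:
    """How many messages a month exceed the allowance (0 if within it)."""
    return max(0, messages_per_month(interval_seconds) - monthly_allowance)


print(f"{messages_per_hour(30_000):.1f} messages/hour")  # prints "41.7 messages/hour"
```

For example, a device reporting once a minute would send 43,200 messages in a 30-day month, 13,200 more than a 30,000-message allowance, whereas reporting every two minutes (21,600 messages) would stay within it.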

What’s next for my Azure Sphere device?

Having used Brian Willess’ posts at Element 14 to get an idea of how this should work, I think my next step is to buy some external sensors and write some real code to monitor something real. Unfortunately, the sensors I want are on back order until the summer, but watch this space!
