One of the most valuable personal development activities in my early career was a trip to the Microsoft TechEd conference in Amsterdam. I learned a lot – not just technically but about making the most of events to gather information, make new industry contacts, and generally top up my knowledge. Indeed, even as a relatively junior consultant, I found that dipping into multiple topics for an hour or so gave me a really good grounding to discover more (or just enough to know something about the topic) – far more so than an instructor-led training course.

Over the years, I attended further “TechEd”s in Amsterdam, Barcelona and Berlin. I fought off the “oh, Mark’s on another jolly” comments by sharing what I learned. Incidentally, conference attendance is no “jolly”: there may be drinks and even parties, but those come after long days of serious mental cramming, often on top of broken sleep in a cheap hotel miles from the conference centre.

Microsoft TechEd is no more. Over the years, as the budgets were cut, the standard of the conference dropped and in the UK we had a local event called Future Decoded. I attended several of these – and it was at Future Decoded that I discovered risual – where I’ve been working for almost four years now.

Now, Future Decoded has also fallen by the wayside and Microsoft has focused on taking its principal technical conference – Microsoft Ignite – on tour, delivering global content locally.

So, a few weeks ago, I found myself at the ExCeL conference centre in London’s Docklands, looking forward to a couple of days at “Microsoft Ignite | The Tour: London”.

Conference format

Just like at TechEd, and at Future Decoded (in the days before I had to use my time between keynotes on stand duty!), the event was broken up into tracks with sessions lasting around an hour. Because that was a full hour of content (Microsoft event talks are often scheduled as an hour plus 15 minutes of Q&A), it was pretty intense, and opportunities to ask questions were generally limited to grabbing the speaker after their talk or visiting the “Ask the Experts” stands in the main hall.

One difference from Microsoft conferences I’ve previously attended was the lack of “level 400” sessions: every session I saw was level 100-300 (mostly 200/300). That’s fine, as it’s the level of content I would expect, but some people may be looking for more detail. If it’s detail you’re after, then Ignite doesn’t seem to be the place.

Also, I noticed that Day 2 had fewer delegates and lacked some of the “hype” from Day 1: whereas the Day 1 welcome talk was over-subscribed, the Day 2 equivalent was almost empty and light on content (not even giving airtime to the conference sponsors). Nevertheless, it was easy to get around the venue (apart from a couple of pinch points).

Personal highlights

I managed to cover 11 topics over two days (plus a fair amount of networking). The track format of the event was intended to let a delegate follow a complete learning path but, as someone who’s a generalist (that’s what Architects have to be), I spread myself around to cover:

- Dealing with a massive onset of data ingestion (Jeramiah Dooley/@jdooley_clt).

- Enterprise network connectivity in a cloud-first world (Paul Collinge/@pcollingemsft).

- Building a world without passwords.

- Discovering Azure Tooling and Utilities (Simona Cotin/@simona_cotin).

- Selecting the right data storage strategy for your cloud application (Jeramiah Dooley/@jdooley_clt).

- Governance in Azure (Sam Cogan/@samcogan).

- Planning and implementing hybrid network connectivity (Thomas Maurer/@ThomasMaurer).

- Transform device management with Windows Autopilot, Intune and OneDrive (Michael Niehaus/@mniehaus and Mizanur Rahman).

- Maintaining your hybrid environment (Niel Peterson/@nepeters).

- Windows Server 2019 Deep Dive (Jeff Woolsey/@wsv_guy).

- Consolidating infrastructure with the Azure Kubernetes Service (Erik St Martin/@erikstmartin).

In the past, I’d have written a blog post for each topic. I was going to say that I simply don’t have the time to do that these days but by the time I’d finished writing this post, I thought maybe I could have split it up a bit more! Regardless, here are some snippets of information from my time at Microsoft Ignite | The Tour: London. There’s more information in the slide decks – which are available for download, along with the content for the many sessions I didn’t attend.

Data ingestion

Ingesting data can be broken into:

- Real-time ingestion.

- Real-time analysis (see trends as they happen – and make changes to create a competitive differentiator).

- Producing actions as patterns emerge.

- Automating reactions in external services.

- Making data consumable (in whatever form people need to use it).

Azure has many services to assist with this – take a look at IoT Hub, Azure Event Hubs, Azure Databricks and more.
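To make the five stages concrete, here is a minimal, self-contained Python sketch of my own. It is not from the session and doesn't use any Azure SDK. A stream of numeric readings is ingested, a rolling average provides the real-time analysis, crossing a threshold produces an action, and a callback stands in for an external service. In Azure, the ingestion and analysis stages would be handled by services such as IoT Hub, Event Hubs or Databricks. The names `RollingAnalyser` and `run_pipeline`, and the threshold logic, are hypothetical.

```python
from collections import deque
from statistics import mean
from typing import Callable, Deque, Iterable, List


class RollingAnalyser:
    """Keeps the last `window` readings and reports their average (real-time analysis)."""

    def __init__(self, window: int) -> None:
        self.readings: Deque[float] = deque(maxlen=window)

    def add(self, value: float) -> float:
        self.readings.append(value)
        return mean(self.readings)


def run_pipeline(
    events: Iterable[float],
    threshold: float,
    notify: Callable[[str], None],
    window: int = 3,
) -> List[float]:
    """Ingest events, analyse a rolling average, act when it crosses `threshold`,
    and return the averages so they can be consumed downstream."""
    analyser = RollingAnalyser(window)
    averages: List[float] = []
    alerting = False
    for value in events:                      # real-time ingestion
        avg = analyser.add(value)             # real-time analysis
        averages.append(avg)                  # consumable output
        if avg > threshold and not alerting:  # an action as a pattern emerges
            alerting = True
            # automated reaction in an "external service" (here, a callback)
            notify(f"rolling average {avg:.1f} exceeded {threshold}")
        elif avg <= threshold:
            alerting = False                  # pattern has gone; re-arm the alert
    return averages
```

For example, `run_pipeline([10, 12, 30, 40, 11], threshold=20, notify=alerts.append)` raises a single alert when the three-reading average first passes 20. The `alerting` flag stops the same pattern from firing repeatedly while it persists.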

Enterprise network connectivity for the cloud

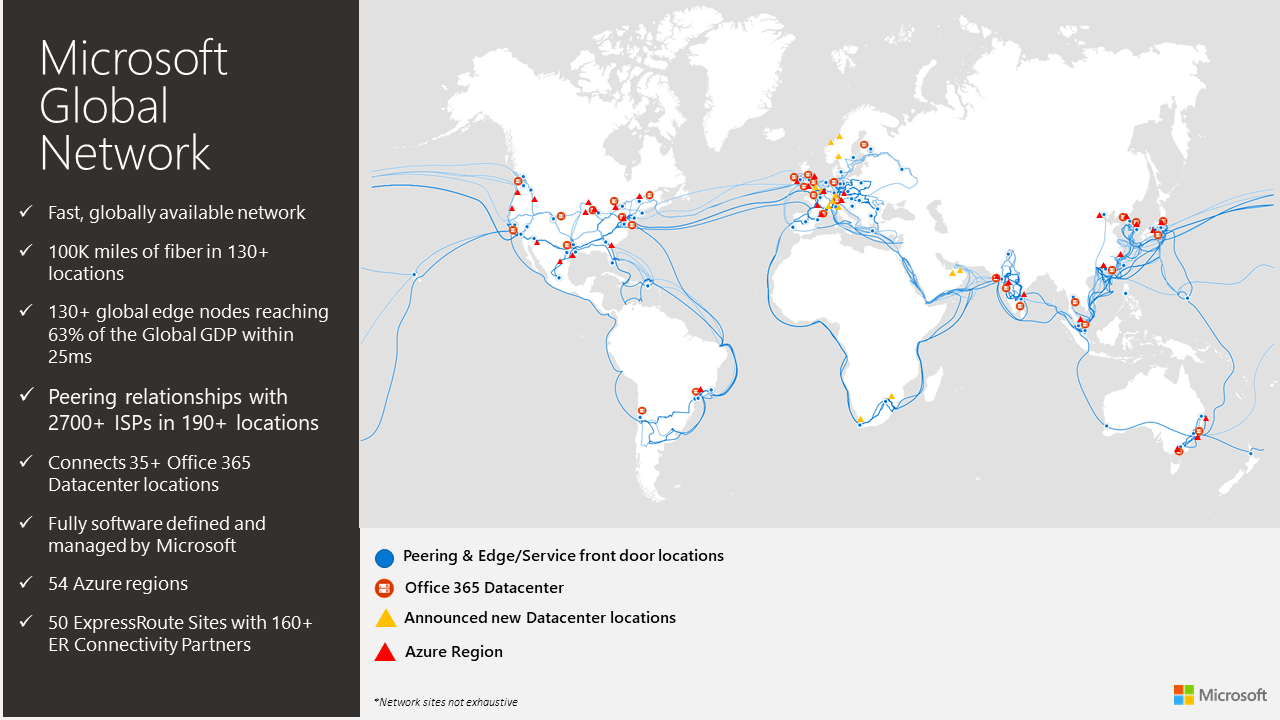

Cloud traffic is increasing whilst traffic that remains internal to the corporate network is in decline. Traditional management approaches are no longer fit for purpose.

Office applications use multiple persistent connections. This causes challenges for proxy servers, which generally degrade the Office 365 user experience. Remediation is possible with:

- Differentiated traffic – follow Microsoft advice to manage known endpoints, including the Office 365 IP address and URL web service.

- Let Microsoft route traffic (data is in a region, not a place). Use DNS resolution to egress connections close to the user (a list of all Microsoft peering locations is available). Optimise the route length and avoid hairpins.

- Assess network security using application-level security, reducing IP ranges and ports and evaluating the service to see if some activities can be performed in Office 365, rather than at the network edge (e.g. DLP, AV scanning).
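The “differentiated traffic” advice can be sketched in a few lines of Python. The sketch makes two assumptions. First, it treats the web service’s JSON as a list of endpoint sets with `urls` and `category` fields, where the category is Optimize, Allow or Default. Second, it sends Optimize and Allow endpoints direct and Default endpoints through the proxy. That routing is my reading of Microsoft’s guidance, not something shown in the session. The sample records and hostnames are placeholders. In practice, the JSON would be downloaded from the web service.

```python
import json
from typing import Dict, Set

# Illustrative records shaped like the JSON returned by the Office 365 IP address
# and URL web service (assumed fields: "id", "serviceArea", "urls", "ips", "category").
# The hostnames are placeholders, not real Office 365 endpoints.
SAMPLE_RESPONSE = json.dumps([
    {"id": 1, "serviceArea": "Exchange", "urls": ["mail.example.com"], "category": "Optimize"},
    {"id": 2, "serviceArea": "SharePoint", "urls": ["files.example.com"], "category": "Allow"},
    {"id": 3, "serviceArea": "Common", "urls": ["cdn.example.com"], "category": "Default"},
    {"id": 4, "serviceArea": "Skype", "ips": ["192.0.2.0/24"], "category": "Optimize"},
])

# Assumption: these categories are routed directly rather than via the proxy.
DIRECT_CATEGORIES = {"Optimize", "Allow"}


def split_routes(response_text: str) -> Dict[str, Set[str]]:
    """Sort endpoint URLs into 'direct' (bypass the proxy) and 'proxy' buckets."""
    routes: Dict[str, Set[str]] = {"direct": set(), "proxy": set()}
    for endpoint_set in json.loads(response_text):
        bucket = "direct" if endpoint_set.get("category") in DIRECT_CATEGORIES else "proxy"
        # Some endpoint sets list only IP ranges, so "urls" may be absent.
        routes[bucket].update(endpoint_set.get("urls", []))
    return routes
```

With the sample data, the Exchange and SharePoint placeholders land in the direct bucket and the Default-category CDN placeholder stays on the proxy path.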

For Azure:

- Azure ExpressRoute is a connection to the edge of the Microsoft global backbone (not to a datacentre). It offers two lines for resilience and two peering types at the gateway: private and public (Microsoft) peering.

- Azure Virtual WAN can be used to build a hub for a region and to connect sites.

- Replace branch office routers with software-defined WAN (SD-WAN) devices and break out where appropriate.

Real-world demo of local and direct network egress cf. centralised approach #Office365 #MSIgniteTheTour pic.twitter.com/1wuVNegXQs

– Mark Wilson (@markwilsonit) February 26, 2019

Passwordless authentication

Basically, there are three options:

- Windows Hello.

- Microsoft Authenticator.

- FIDO2 Keys.

Azure tooling and utilities

Useful resources include:

- Azure Portal:

- Create dashboards to optimise the experience.

- Visual Studio Code:

- An extensible code editor with a marketplace.

- Edit, debug and run code including native integration with source control.

- Rich coding experience including Intellisense.

- Interactive Playground to learn new skills (find out more – along with other tips and tricks in this article).

- Azure Command Line Interface (CLI) and Azure PowerShell:

- CLI is cross-platform (Mac/Windows/Linux) and can be run locally or in the Cloud Shell.

- There are PowerShell Modules for Azure.

- Azure Cloud Shell:

- Bash or PowerShell.

- Access via >_ icon in the Azure Portal – or go to shell.azure.com.

- Uses an underlying storage account so can access files from other locations.

- Can even open an instance of Visual Studio Code inside the Cloud Shell.

- Azure Resource Manager (ARM) Templates:

- JSON format templates, supporting parameters, variables, resources and objects.
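As an illustration of the template format, the Python sketch below builds a minimal ARM template as a dictionary and prints it as JSON. The top-level sections (`parameters`, `variables`, `resources` and `outputs`) follow the documented template structure. The storage account resource, its `apiVersion` and its SKU are illustrative choices of mine, and the parameter names are hypothetical.

```python
import json


def minimal_storage_template() -> dict:
    """Return a minimal ARM template (as a dict) that deploys one storage account."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        # Values supplied at deployment time
        "parameters": {
            "storageAccountName": {"type": "string"},
            "location": {"type": "string", "defaultValue": "[resourceGroup().location]"},
        },
        # Values computed or fixed inside the template
        "variables": {"skuName": "Standard_LRS"},
        # The resources to deploy (illustrative storage account)
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2019-04-01",
                "name": "[parameters('storageAccountName')]",
                "location": "[parameters('location')]",
                "sku": {"name": "[variables('skuName')]"},
                "kind": "StorageV2",
                "properties": {},
            }
        ],
        # Values returned after deployment
        "outputs": {
            "storageAccountId": {
                "type": "string",
                "value": "[resourceId('Microsoft.Storage/storageAccounts', parameters('storageAccountName'))]",
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(minimal_storage_template(), indent=2))
```

You could save the output as, for example, `azuredeploy.json` and deploy it with current versions of the Azure CLI, using `az deployment group create --resource-group <rg> --template-file azuredeploy.json --parameters storageAccountName=<name>`.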

Selecting data storage for a cloud application

What to use? It depends! Classify data by:

- Type of data:

- Structured (fits into a table)

- Semi-structured (may fit in a table but may also use outside metadata, external tables, etc.)

- Unstructured (documents, images, videos, etc.)

- Properties of the data:

- Volume (how much)

- Velocity (change rate)

- Variety (sources, types, etc.)

| Item | Type | Volume | Velocity | Variety |
|---|---|---|---|---|
| Product catalogue | Semi-structured | High | Low | Low |
| Product photos | Unstructured | High | Low | Low |
| Sales data | Semi-structured | Medium | High | High |

How to match data to storage:

- Storage-driven: build apps on what you have.

- Cloud-driven: deploy to the storage that makes sense.

- Function-driven: build what you need; storage comes with it.
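The classification step can be expressed as data plus a simple rule. This Python sketch records the three items from the table against the type, volume, velocity and variety properties. It then applies a hypothetical rule of thumb (mine, not the speaker’s) that maps each data type to a broad class of Azure storage. It also flags high-velocity data for a closer look at write throughput.

```python
from dataclasses import dataclass

# Hypothetical rule of thumb (not from the session): data type -> broad storage class.
STORAGE_BY_TYPE = {
    "structured": "relational database (e.g. Azure SQL Database)",
    "semi-structured": "document/NoSQL store (e.g. Azure Cosmos DB)",
    "unstructured": "object storage (e.g. Azure Blob storage)",
}


@dataclass(frozen=True)
class DataItem:
    name: str
    data_type: str  # "structured", "semi-structured" or "unstructured"
    volume: str     # "Low", "Medium" or "High"
    velocity: str   # change rate
    variety: str    # sources, types, etc.


def suggest_storage(item: DataItem) -> str:
    """Suggest a storage class from the data type, noting a high change rate."""
    suggestion = STORAGE_BY_TYPE[item.data_type]
    if item.velocity == "High":
        suggestion += "; high change rate, so check write throughput"
    return suggestion


# The rows from the table above
CATALOGUE = [
    DataItem("Product catalogue", "semi-structured", "High", "Low", "Low"),
    DataItem("Product photos", "unstructured", "High", "Low", "Low"),
    DataItem("Sales data", "semi-structured", "Medium", "High", "High"),
]
```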

Governance in Azure

It’s important to understand what’s running in an Azure subscription – consider cost, security and compliance:

- Review (and set a baseline):

- Tools include: Resource Graph; Cost Management; Security Center; Secure Score.

- Organise (housekeeping to create a subscription hierarchy, classify subscriptions and resources, and apply access rights consistently):

- Tools include: Management Groups; Tags; RBAC.

- Audit:

- Make changes to implement governance without impacting people/work. Develop policies, apply budgets and audit the impact of the policies.

- Tools include: Cost Management; Azure Policy.

- Enforce:

- Change policies to enforcement, add resolution actions and enforce budgets.

- Consider what will happen in the event of non-compliance.

- Tools include: Azure Policy; Cost Management; Azure Blueprints.

- (Loop back to review)

- Have we achieved what we wanted to?

- Understand what is being spent and why.

- Know that only approved resources are deployed.

- Be sure of adhering to security practices.

- Opportunities for further improvement.
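The move from audit to enforcement can be illustrated with an “allowed locations” rule. The Python sketch below mirrors the `if`/`then`/`effect` shape of an Azure Policy rule. The evaluator, however, is a deliberately simplified stand-in that understands only this one rule shape; it is not how Azure Policy evaluates definitions. The resource names and regions are hypothetical.

```python
from typing import Dict, List


def allowed_locations_rule(allowed: List[str], effect: str) -> Dict:
    """Build a rule shaped like an Azure Policy 'allowed locations' policyRule."""
    return {
        "if": {"not": {"field": "location", "in": allowed}},
        "then": {"effect": effect},
    }


def evaluate(rule: Dict, resource: Dict) -> str:
    """Simplified evaluator: return 'compliant', or the rule's effect
    ('audit' = report only, 'deny' = block) for a non-compliant resource."""
    condition = rule["if"]["not"]
    if resource.get(condition["field"]) in condition["in"]:
        return "compliant"
    return rule["then"]["effect"]


resources = [
    {"name": "vm1", "location": "uksouth"},
    {"name": "vm2", "location": "eastus"},
]

audit_rule = allowed_locations_rule(["uksouth", "ukwest"], effect="audit")
enforce_rule = allowed_locations_rule(["uksouth", "ukwest"], effect="deny")
```

Switching the effect from `audit` to `deny` is the step from the Audit stage, where non-compliance is only reported, to the Enforce stage, where non-compliant deployments are blocked.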

Planning and implementing hybrid network connectivity

Moving to the cloud allows for fast deployment but planning is just as important as it ever was. Meanwhile, startups can be cloud-only but most established organisations have some legacy and need to keep some workloads on-premises, with secure and reliable hybrid communication.

Considerations include:

- Extension of the internal protected network:

- Should workloads in Azure only be accessible from the internal network?

- Are Azure-hosted workloads restricted from accessing the Internet?

- Should Azure have a single entry and egress point?

- Can the connection traverse the public Internet (compliance/regulation)?

- IP addressing:

- Existing addresses on-premises; public IP addresses.

- Namespaces and name resolution.

- Multiple regions:

- Where are the users (multiple on-premises sites); where are the workloads (multiple Azure regions); how will connectivity work (should each site have its own connectivity)?

- Azure virtual networks:

- Form an isolated boundary with secure communications.

- Azure-assigned IP addresses (no need for a DHCP server).

- Segmented with subnets.

- Network Security Groups (NSGs) create boundaries around subnets.

- Connectivity:

- Site to site (S2S) VPNs at up to 1Gbps

- Encrypted traffic over the public Internet to the GatewaySubnet in Azure, which hosts VPN Gateway VMs.

- 99.9% SLA on the Gateway in Azure (not the connection).

- Don’t deploy production workloads on the GatewaySubnet; /26, /27 or /28 subnets recommended; don’t apply NSGs to the GatewaySubnet – i.e. let Azure manage it.

- Dedicated connections (Azure ExpressRoute): private connection at up to 10Gbps to Azure with:

- Private peering (to access Azure).

- Microsoft peering (for Office 365, Dynamics 365 and Azure public IPs).

- 99.9% SLA on the entire connection.

- Other connectivity services:

- Azure ExpressRoute Direct: a 100Gbps direct connection to Azure.

- Azure ExpressRoute Global Reach: using the Microsoft network to connect multiple local on-premises locations.

- Azure Virtual WAN: branch to branch and branch to Azure connectivity with software-defined networks.

- Hybrid networking technologies:

- Azure network adapter for Windows Server 2019 is a point to site (P2S) VPN solution to connect a Windows Server to an Azure virtual network.

- Azure File Sync offers tiered storage for Windows Server with Azure file storage and rapid disaster recovery. Can be thought of as “OneDrive for servers”.
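The IP addressing and GatewaySubnet guidance lends itself to a quick planning check. This Python sketch uses the standard `ipaddress` module to do three things. It rejects VNet address spaces that overlap on-premises ranges. It allows only the recommended /26, /27 or /28 sizes. It then carves the GatewaySubnet from the top of the VNet range; placing it there is my assumption, not a requirement. It also subtracts the five addresses that Azure reserves in every subnet. The function names and address ranges are hypothetical.

```python
import ipaddress
from typing import List

AZURE_RESERVED_PER_SUBNET = 5  # Azure reserves five addresses in every subnet


def plan_gateway_subnet(vnet_cidr: str, prefix: int, on_premises: List[str]) -> ipaddress.IPv4Network:
    """Return the last /prefix block of the VNet for use as the GatewaySubnet,
    refusing non-recommended sizes and VNets that overlap on-premises ranges."""
    if prefix not in (26, 27, 28):
        raise ValueError("GatewaySubnet: /26, /27 or /28 recommended")
    vnet = ipaddress.ip_network(vnet_cidr)
    for cidr in on_premises:
        if vnet.overlaps(ipaddress.ip_network(cidr)):
            raise ValueError(f"{vnet} overlaps on-premises range {cidr}")
    *_, last = vnet.subnets(new_prefix=prefix)  # keep only the final block
    return last


def usable_addresses(subnet: ipaddress.IPv4Network) -> int:
    """Addresses left for gateway instances after Azure's reservations."""
    return subnet.num_addresses - AZURE_RESERVED_PER_SUBNET
```

For example, with a 10.1.0.0/16 VNet and a 192.168.0.0/16 on-premises network, a /27 GatewaySubnet comes out as 10.1.255.224/27 with 27 usable addresses.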

Modern Device Management (Autopilot, Intune and OneDrive)

The old way of managing PC builds:

- Build an image with customisations and drivers

- Deploy to a new computer, overwriting what was on it

- Expensive and time-consuming, when the device already has a perfectly good OS

Instead, how about:

- Unbox PC

- Transform with minimal user interaction

- Device is ready for productive use

The transformation is:

- Take OEM-optimised Windows 10:

- Windows 10 Pro and drivers.

- Clean OS.

- Plus software, settings, updates, features, user data (with OneDrive for Business).

- Ready for productive use.

The goal is to reduce the overall cost of deploying devices. Ship to a user with half a page of instructions…

Autopilot deployment is cloud driven and will eventually be centralised through Intune:

- Register device:

- From OEM or Channel (manufacturer, model and serial number).

- Automatically (existing Intune-managed devices).

- Manually using a PowerShell script to generate a CSV file with serial number and hardware hash, which is then uploaded to the Intune portal.

- Assign Autopilot profile:

- Use Azure AD Groups to assign/target.

- The profile includes settings such as deployment mode, BitLocker encryption, device naming, out of box experience (OOBE).

- An Azure AD device object is created for each imported Autopilot device.

- Deploy:

- Needs Azure AD Premium P1/P2

- Scenarios include:

- User-driven with Azure AD:

- Boot to OOBE, choose language, locale, keyboard and provide credentials.

- The device is joined to Azure AD, enrolled to Intune and policies are applied.

- User signs on and user-assigned items from Intune policy are applied.

- Once the desktop loads, everything is present (including file links in OneDrive); the time taken depends on the software being pushed.

- Self-deploying (e.g. kiosk, digital signage):

- No credentials required; device authenticates with Azure AD using TPM 2.0.

- User-driven with hybrid Azure AD join:

- Requires Offline Domain Join Connector to create AD DS computer account.

- Device connected to the corporate network (in order to access AD DS), registered with Autopilot, then as before.

- Sign on to Azure AD and then to AD DS during deployment. If they use the same UPN then it makes things simple for users!

- Autopilot for existing devices (Windows 7 to 10 upgrades):

- Backup data in advance (e.g. with OneDrive)

- Deploy generic Windows 10.

- Run Autopilot user-driven mode. Hardware hashes can’t be harvested in Windows 7, so use a JSON configuration file in the image (the offline equivalent of a profile). Intune will ignore the unknown device and Autopilot will use the file instead. After Windows 10 is deployed, Intune will notice a PC in the group and apply the profile, so it will work if the PC is reset in future.

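For the manual registration route, CSV files gathered from individual devices often need combining before upload. The Python sketch below merges per-device exports and drops duplicate serial numbers. It assumes the three-column layout (`Device Serial Number`, `Windows Product ID`, `Hardware Hash`) produced by the PowerShell script mentioned above. Check the headers against your own export before relying on it. The function name and sample values are hypothetical.

```python
import csv
import io
from typing import Iterable, List

# Assumed column headers of the Autopilot hardware-hash CSV; verify against a real export.
HEADERS = ["Device Serial Number", "Windows Product ID", "Hardware Hash"]


def merge_autopilot_csvs(files: Iterable[str]) -> str:
    """Combine per-device CSV exports (passed in as text) into one upload file,
    keeping the first row seen for each serial number."""
    seen = set()
    rows: List[dict] = []
    for text in files:
        for row in csv.DictReader(io.StringIO(text)):
            serial = (row.get("Device Serial Number") or "").strip()
            if serial and serial not in seen:
                seen.add(serial)
                rows.append({h: row.get(h) or "" for h in HEADERS})
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=HEADERS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()
```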

Autopilot roadmap (1903) includes:

- “White glove” pre-provisioning for end users: QR code to track, print welcome letter and shipping label!

- Enrolment status page (ESP) improvements.

- Cortana voiceover disabled on OOBE.

- Self-updating Autopilot (update Autopilot without waiting to update Windows).

Windows #Autopilot roadmap #MSIgniteTheTour – some great enhancements coming for #Windows10 1903 – “white glove” deployments sound particularly useful pic.twitter.com/iKlsrSJFZk

– Mark Wilson (@markwilsonit) February 27, 2019

Maintaining your hybrid environment

Common requirements in an IaaS environment include policy-based configuration, a single management and monitoring solution, and auto-remediation.

Azure Automation allows configuration and inventory; monitoring and insights; and response and automation. The Azure Portal provides a single pane of glass for hybrid management (Windows or Linux; any cloud or on-premises).

For configuration and state management, use Azure Automation State Configuration (built on PowerShell Desired State Configuration).

Inventory can be managed with Log Analytics extensions for Windows or Linux. An Azure Monitoring Agent is available for on-premises or other clouds. Inventory is not instant, though: it can take 3-10 minutes for Log Analytics to ingest the data. Changes can be visualised (for state tracking purposes) in the Azure Portal.

Azure Monitor and Log Analytics can be used for data-driven insights, unified monitoring and workflow integration.

Responding to alerts can be achieved with Azure Automation runbooks, which store scripts in Azure and run them in Azure. Scripts can use PowerShell or Python, so they support both Windows and Linux. A webhook can be triggered with an HTTP POST request, and a Hybrid Runbook Worker can be used to run runbooks on-premises or in another cloud.
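Triggering a runbook through its webhook needs nothing more than an HTTP POST. This Python sketch uses the standard library to build (but not send) such a request with a JSON body. The URL is a hypothetical placeholder, because Azure Automation generates the real one, including a secret token, when the webhook is created. The payload fields are also hypothetical. A runbook would receive the body through its `WebhookData` parameter and parse it itself.

```python
import json
import urllib.request

# Hypothetical placeholder: the real webhook URL (with its token) comes from Azure Automation.
WEBHOOK_URL = "https://example.invalid/webhooks?token=REPLACE_ME"


def build_webhook_request(url: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) an HTTP POST that would start the runbook."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_webhook_request(WEBHOOK_URL, {"vmName": "web01", "action": "restart"})
# To fire it for real, against a genuine webhook URL: urllib.request.urlopen(request)
```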

It’s possible to use the Azure VM agent to run a command on a VM from Azure portal without logging in!

Windows Server 2019

Windows Server strategy starts with Azure. Windows Server 2019 is focused on:

- Hybrid:

- Backup/connect/replicate VMs.

- Storage Migration Service to migrate unstructured data into Azure IaaS or another on-premises location (from 2003+ to 2016/19).

- Inventory (interrogate storage, network security, SMB shares and data).

- Transfer (pairings of source and destination), including ACLs, users and groups. Details are logged in a CSV file.

- Cutover (make the new server look like the old one – same name and IP address). Validate before cutover – ensure everything will be OK. Read-only process (except change of name and IP at the end for the old server).

- Azure File Sync: centralise file storage in Azure and transform existing file servers into hot caches of data.

- Azure Network Adapter to connect servers directly to Azure networks (see above).

- Hyper-converged infrastructure (HCI):

- The server market is still growing and is increasingly SSD-based.

- Traditional rack looked like SAN, storage fabric, hypervisors, appliances (e.g. load balancer) and top of rack Ethernet switches.

- Now we use standard x86 servers with local drives and software-defined everything. Manage with Admin Center in Windows Server (see below).

- Windows Server now has support for persistent memory: DIMM-based; still there after a power-cycle.

- The Windows Server Software Defined (WSSD) programme is the Microsoft approach to software-defined infrastructure.

- Security: shielded VMs for Linux (VM as a black box, even for an administrator); integrated Windows Defender ATP; Exploit Guard; System Guard Runtime.

- Application innovation: semi-annual updates are designed for containers. Windows Server 2019 is the latest LTSC release, so it includes the 1709/1803 additions:

- Enable developers and IT Pros to create cloud-native apps and modernise traditional apps using containers and microservices.

- Linux containers on a Windows host.

- Service Fabric and Kubernetes for container orchestration.

- Windows Subsystem for Linux.

- Optimised images for Server Core and Nano Server.

Windows Admin Center is core to the future of Windows Server management and, because it’s based on remote management, servers can be core or full installations – even containers (logs and console). Download from http://aka.ms/WACDownload

- 50MB download, no need for a server. Runs in a browser and is included in Windows/Windows Server licence

- Runs on a layer of PowerShell. Use the >_ icon to see the raw PowerShell used by Admin Center (copy and paste to use elsewhere).

- Extensible platform.

What’s next?

- More cloud integration

- Update cadence is:

- Insider builds every 2 weeks.

- Semi-annual channel every 6 months (specifically for containers):

- 1709/1803/1809/19xx.

- Long-term servicing channel

- Every 2-3 years.

- 2016, 2019 (in September 2018), etc.

Windows Server 2008 and 2008 R2 reach the end of support in January 2020 but customers can move Windows Server 2008/2008 R2 servers to Azure and get 3 years of security updates for free (on-premises support is chargeable).

Further reading: What’s New in Windows Server 2019.

Containers/Azure Kubernetes Service

Containers:

- Are fully-packaged applications that use a standard image format for better resource isolation and utilisation.

- Are ready to deploy via an API call.

- Are not virtual machines (for Linux).

- Do not use hardware virtualisation.

- Offer no hard security boundary (for Linux).

- Can be more cost effective/reliable.

- Have no GUI.

Kubernetes is:

- An open source system for auto-deployment, scaling and management of containerised apps.

- Container Orchestrator to manage scheduling; affinity/anti-affinity; health monitoring; failover; scaling; networking; service discovery.

- Modular and pluggable.

- Self-healing.

- Designed by Google based on a system they use to run billions of containers per week.

- Described in “Phippy goes to the zoo”.
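Kubernetes’ self-healing behaviour comes from continually comparing the desired state with the actual state and acting on the difference. The Python sketch below is a toy model of that reconciliation idea only. It is not Kubernetes code or its API, and the app names and replica counts are hypothetical.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], running: Dict[str, int]) -> List[str]:
    """Compare desired replica counts with running ones and return the actions
    needed to converge (toy model of a control loop, not the Kubernetes API)."""
    actions: List[str] = []
    for app in sorted(set(desired) | set(running)):
        want = desired.get(app, 0)
        have = running.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} x {app}")  # replace failed containers
        elif have > want:
            actions.append(f"stop {have - want} x {app}")   # remove surplus/stale ones
    return actions
```

Take a desired state of three `web` and two `api` replicas, in a cluster where two `web` containers have failed and a stale `old-job` is still running. The function returns `["stop 1 x old-job", "start 2 x web"]`. Running this comparison repeatedly is what lets the cluster recover without manual intervention.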

Azure container offers include:

- Azure Container Instances (ACI): containers on demand (Linux or Windows) with no need to provision VMs or clusters; per-second billing; integration with other Azure services; a public IP; persistent storage.

- Azure App Service for Linux: a fully-managed PaaS for containers including workflows and advanced features for web applications.

- Azure Kubernetes Service (AKS): a managed Kubernetes offering.

Wrap-up

So, there you have it. An extremely long blog post with some highlights from my attendance at Microsoft Ignite | The Tour: London. It’s taken a while to write up so I hope the notes are useful to someone else!

Fantastic couple of days at #MSIgniteTheTour (although quieter/smaller than I expected). Thanks to all the speakers – it’s been great to dip into such a wide variety of topics. Now, back to the day job (and normal tweeting levels!) pic.twitter.com/NtTDXOG22h

– Mark Wilson (@markwilsonit) February 27, 2019
