You probably noticed, but Microsoft held its Ignite conference in Chicago last week. As is normal now, there’s a “Book of News” for all the major announcements, and the keynotes are available to watch online. But there’s an awful lot to sort through. Luckily, CNET created a 15-minute summary of Satya Nadella’s keynote:

Major announcements from Ignite 2024

Last year, I wrote about how it was clear that Microsoft is all about artificial intelligence (AI), and this year is no different. The rest of this post focuses on the main announcements, with a little analysis from yours truly on what the implications might be.

| Announcement | What it means | Find out more |
|---|---|---|
| Investing in security, particularly around Microsoft Purview | Data governance is of central importance in the age of AI. Microsoft has announced updates to prevent oversharing, risky use of AI, and misuse of protected materials. Because one of the major concerns is accidental access to badly secured information, this will be an important development for those who make use of it. | https://aka.ms/Ignite2024Security/ |
| Zero Day Quest | A new hacking event with $4m in rewards. Bound to grab headlines! | https://aka.ms/ZeroDayQuest |

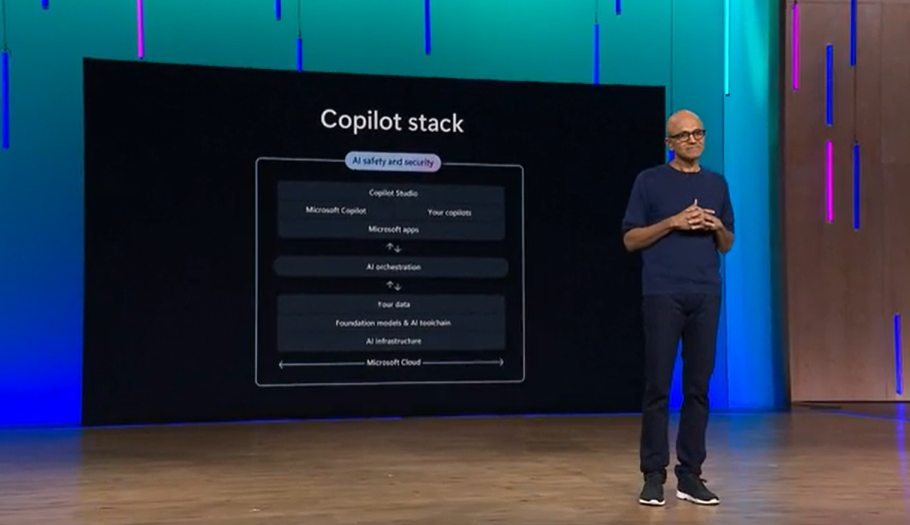

| Announcement | What it means | Find out more |
|---|---|---|
| Copilot as the UI for AI | If there’s one thing to take away from Ignite, it’s that Microsoft sees Copilot as the UI for AI: the organising layer for work and how it gets done. 1. Every employee will have a Copilot that knows them and their work, enhancing productivity and saving time. 2. There will be agents to automate business processes. 3. The IT department will have a control system to manage, secure and measure the impact of Copilot. | |
| Copilot Actions | Copilot Actions are intended to reduce the time spent on repetitive everyday tasks. They were described as “Outlook Rules for the age of AI” (but spanning the entire Microsoft 365 ecosystem). I’m sceptical about these but willing to be convinced; let’s see how well they work in practice. | https://aka.ms/CopilotActions |
| Copilot Agents | If 2023–24 was about generative AI, then “agentic” computing is the term for 2025. There will be agents that work within the context of a team, acting as teammates scoped to specific roles. Examples include a facilitator in Teams to keep meetings focused and manage follow-up and action items; a Project Management agent in Planner to create a plan and oversee task assignments and content creation; self-service agents that augment HR and IT departments by answering questions and completing tasks; and a SharePoint agent for every site, providing instant access to real-time information. Organisations can create their own agents using Copilot Studio, and the aim is that it should be as easy to create an agent as it is to create a document. | https://aka.ms/AgentsInM365 |
| Copilot Analytics | Answering criticism about the cost of licensing Copilot, Microsoft is providing analytics that correlate usage with business metrics. Organisations will be able to tune their Copilot usage to business KPIs and show how that usage translates into business outcomes. | https://aka.ms/CopilotAnalytics |
| Mobile Application Management on Windows 365 | Microsoft is clearly keen to push its “cloud PC” concept (Windows 365), with new applications so that users can access a secure computing environment from iOS and Android devices. Having spent years working to move clients away from expensive thin-client infrastructure and back to properly managed “thick clients”, I’m not convinced about the cloud PC, but maybe I’m just an old man shouting at the clouds… | https://aka.ms/WindowsAppAndroid |
| Windows 365 Link | Windows 365 Link is a simple, secure, purpose-built access device (aka a thin PC). It’s admin-less and password-less, with security configurations enabled by default that cannot be turned off. The aim is that users connect directly to their cloud PC with no data left locally. It will be available from April 2025. If you’re going to invest in this approach, then it could be a useful device, but it’s not a Microsoft version of a Mac Mini: it’s all about the cloud. | https://aka.ms/Windows365Link |
| Windows Resiliency Initiative | Does anyone remember “Trustworthy Computing”? Well, the Windows Resiliency Initiative is the latest attempt to make Windows more secure and reliable. It includes new features like Windows Hotpatch to apply critical updates without a restart across an entire IT estate. | https://aka.ms/WinWithSecurity |
| Azure Local | A rebranding and expansion of Azure Stack HCI that brings Azure Arc to the edge, so organisations can run mission-critical workloads in distributed locations. | https://aka.ms/AzureLocal |
| Azure Integrated HSM | Microsoft’s first in-house security chip hardens key management without impacting performance. This will be part of every new server deployed on Azure starting next year. | https://aka.ms/AzureIntegratedHSM |
| Azure Boost | Microsoft’s first in-house data processing unit (DPU) is designed to accelerate data-centric workloads. It can run cloud storage workloads using a third of the power while delivering four times the performance. | https://aka.ms/AzureBoostDPU |
| Preview of NVIDIA Blackwell AI infrastructure on Azure | By this point, even I’m yawning, but this is a fantastically fast computing environment optimised for AI training workloads. It’s not really something that most of us will use. | https://aka.ms/NDGB200v6 |
| Azure HBv5 | Co-engineered with AMD, this was described as a new standard for high performance computing and cited as being up to 8 times faster than any other cloud VM. | https://aka.ms/AzureHBv5 |
| Fabric | SQL Server is coming natively to Fabric in the form of Microsoft Fabric Databases. The aim is to simplify operational databases in the same way that Fabric has already done for analytical requirements. It provides an enterprise data platform that serves all use cases, using open-source formats in the Fabric OneLake data lake. I have to admit, it does sound very interesting, but there will undoubtedly be some nuances that I’ll leave to my data-focused colleagues. | https://aka.ms/Fabric |
| Azure AI Foundry | Described as a “first class application server for the AI age”, it unifies all models, tooling, safety and monitoring into a single experience, integrated with development tools as a standalone SDK and a portal. There are 1,800 models in the catalogue for customisation and experimentation. | https://aka.ms/MaaSExperimentation https://aka.ms/CustomizationCollaborations |
| Azure AI Agent Service | Build, deploy and scale AI apps to automate business processes. Whereas Copilot Studio offers a graphical approach, this provides a code-first way for developers to create agents grounded in data, wherever it is. | https://ai.azure.com/ |
| Other AI announcements | There will be AI reports and other management capabilities in Foundry, including evaluation of models. Safety is a priority too, with tools to build secure AI, including Prompt Shields to detect and block attempts to manipulate model outputs, and risk and safety evaluations for image content. | |
| Quantum Computing | This will be the buzzword that replaces AI in the coming years. Quantum is undoubtedly significant, but it’s still highly experimental. Nevertheless, Microsoft is making progress in the quantum arms race, with what it describes as the “world’s most powerful quantum computer”: 24 logical qubits, double the previous record. | https://aka.ms/AQIgniteBlog |

Featured image: screenshots from the Microsoft Ignite keynote stream, under fair use for copyright purposes.